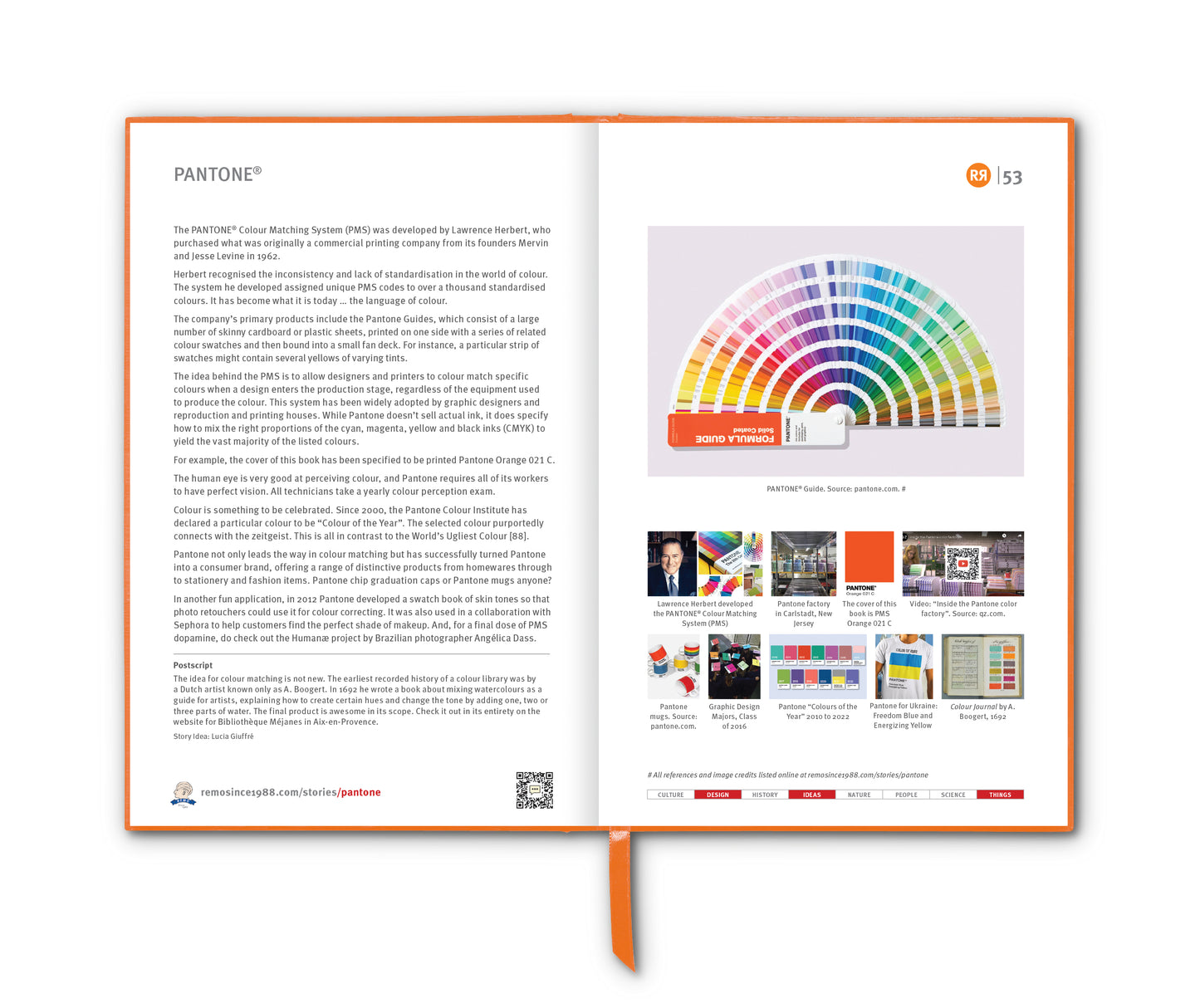

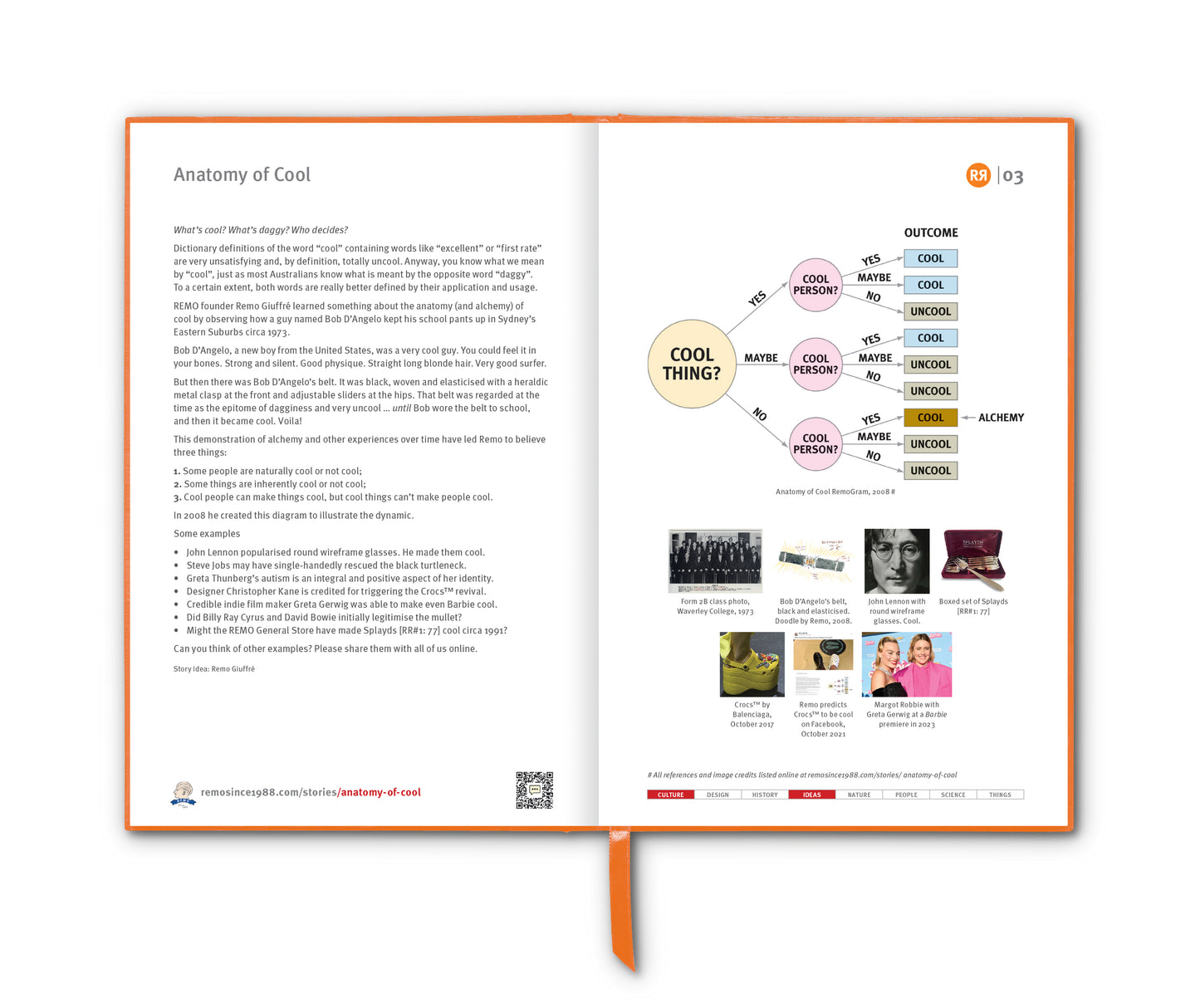

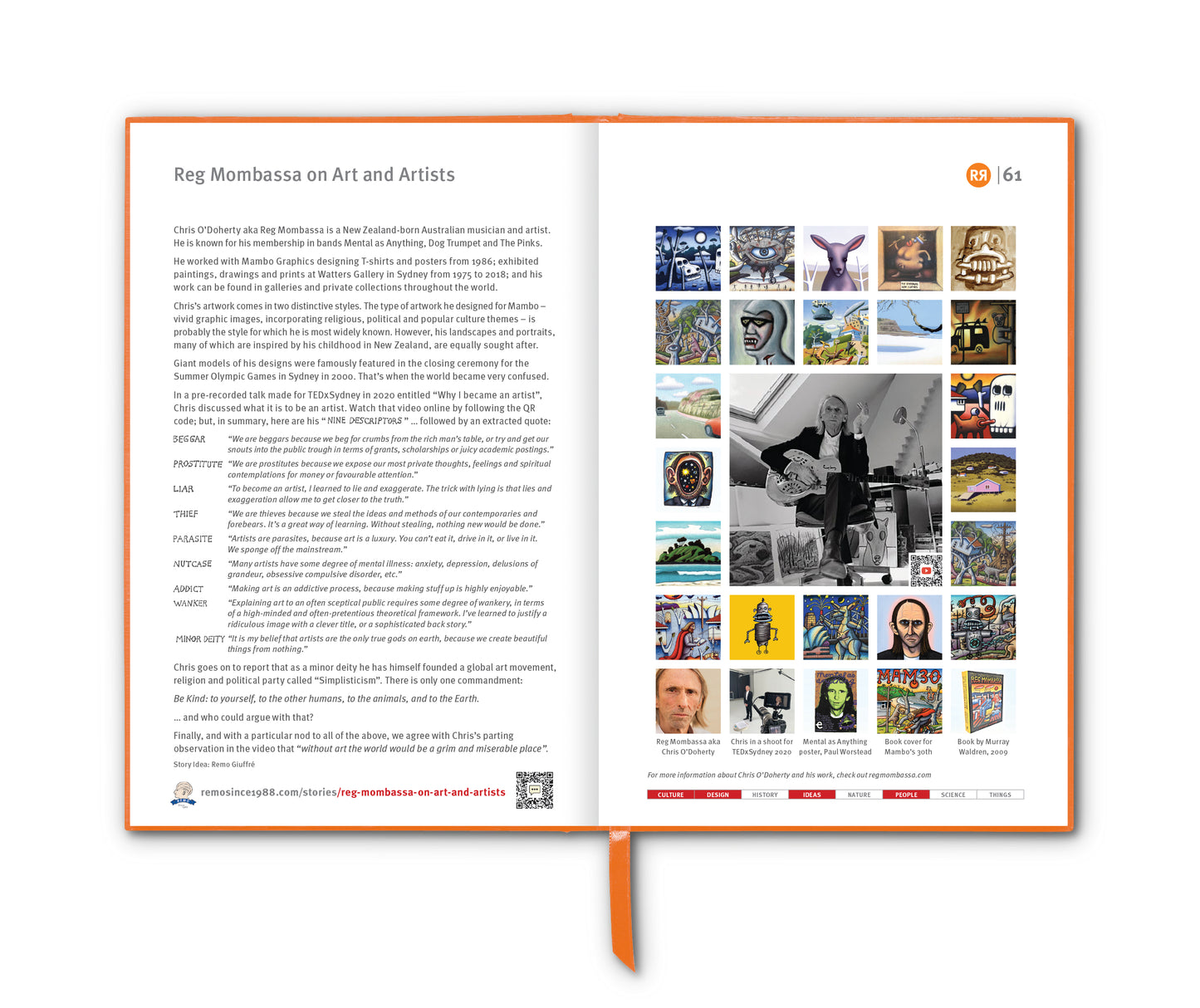

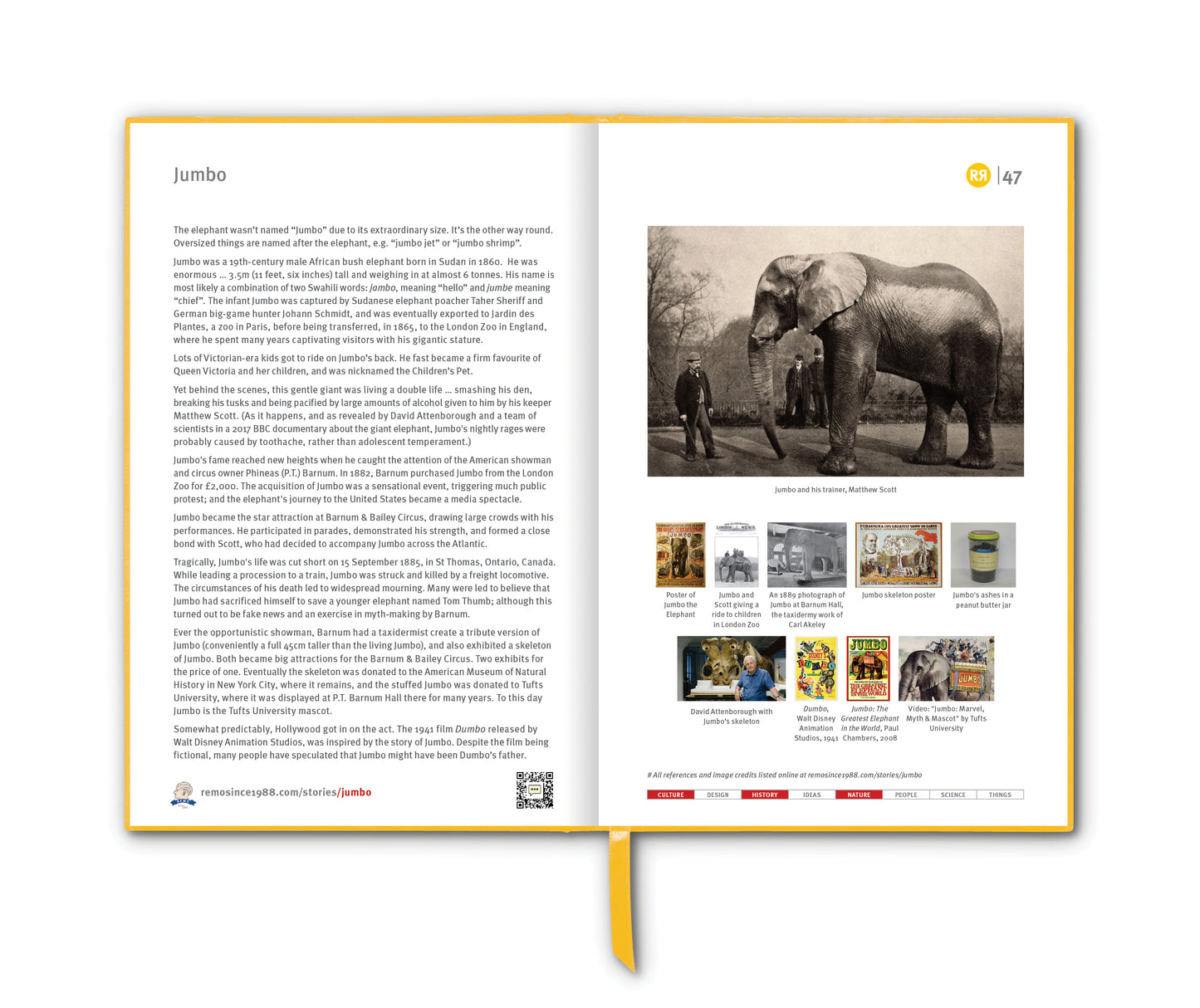

The filter bubble is a concept that describes the personalised environment created by algorithms on social media platforms, search engines and other digital services.

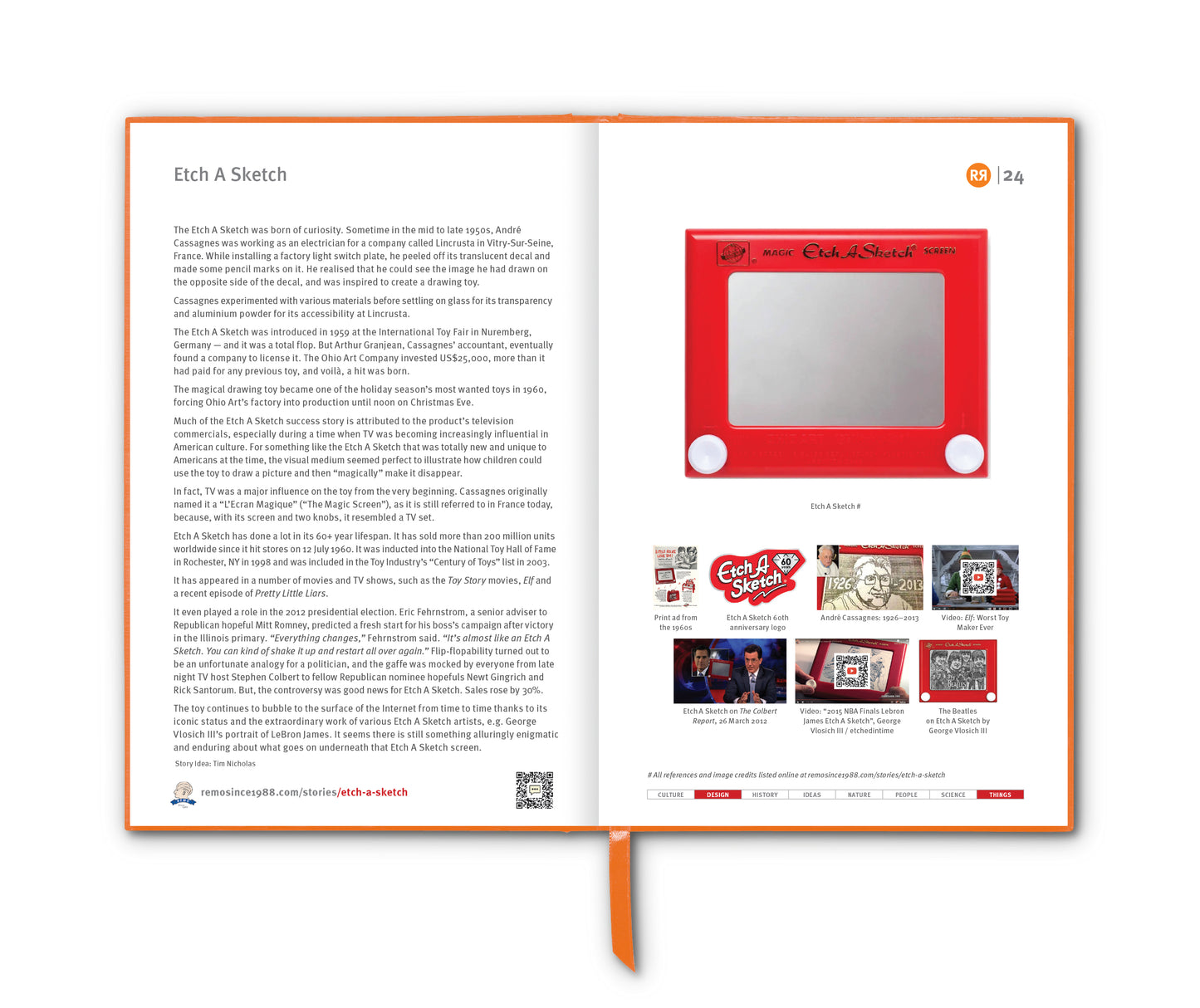

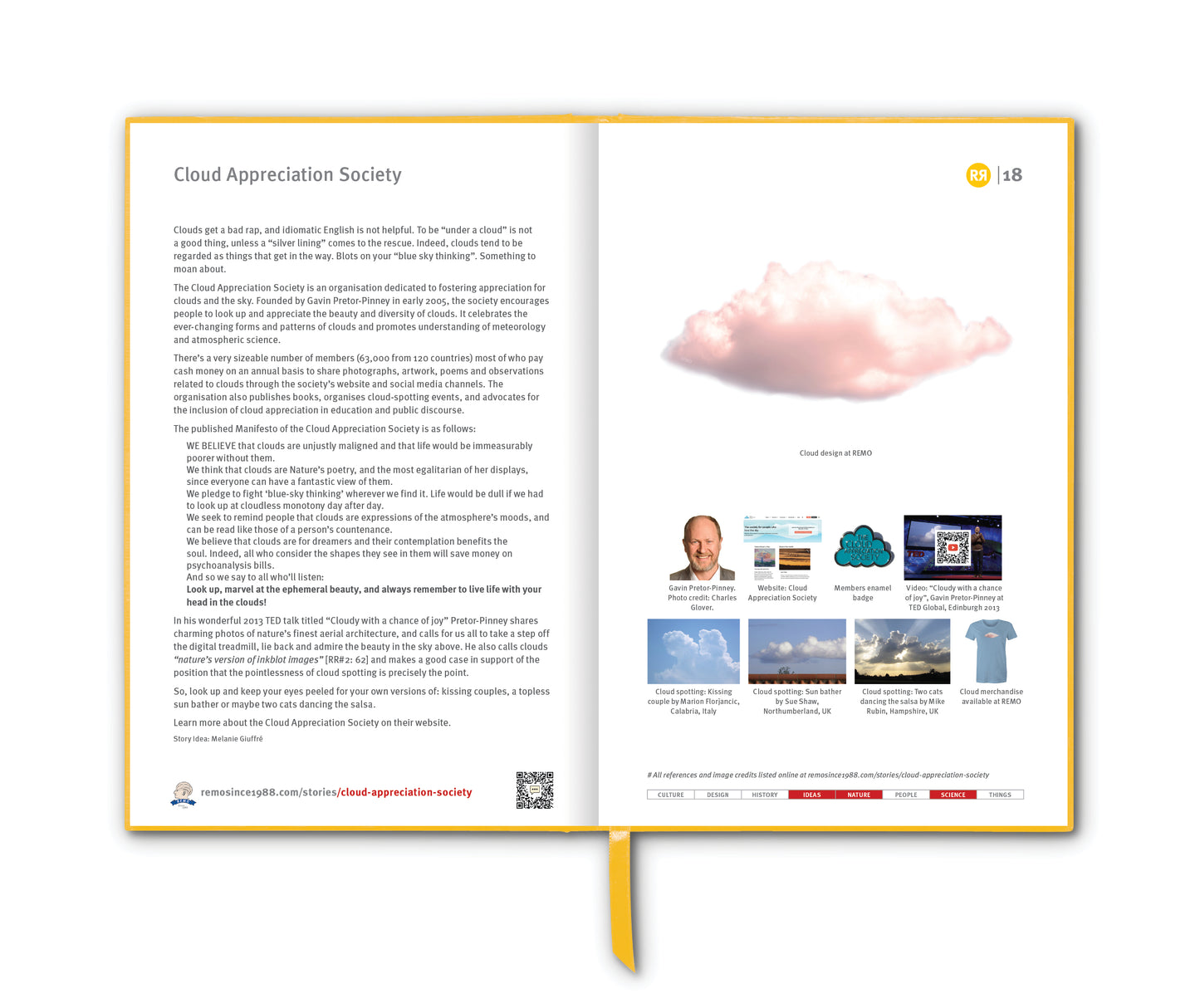

These algorithms track user behaviour and preferences (e.g. searches, likes and clicks) to deliver content that aligns with existing interests, views and beliefs. Over time, this creates a “bubble” of information that reinforces the user’s current worldview while filtering out opposing perspectives.

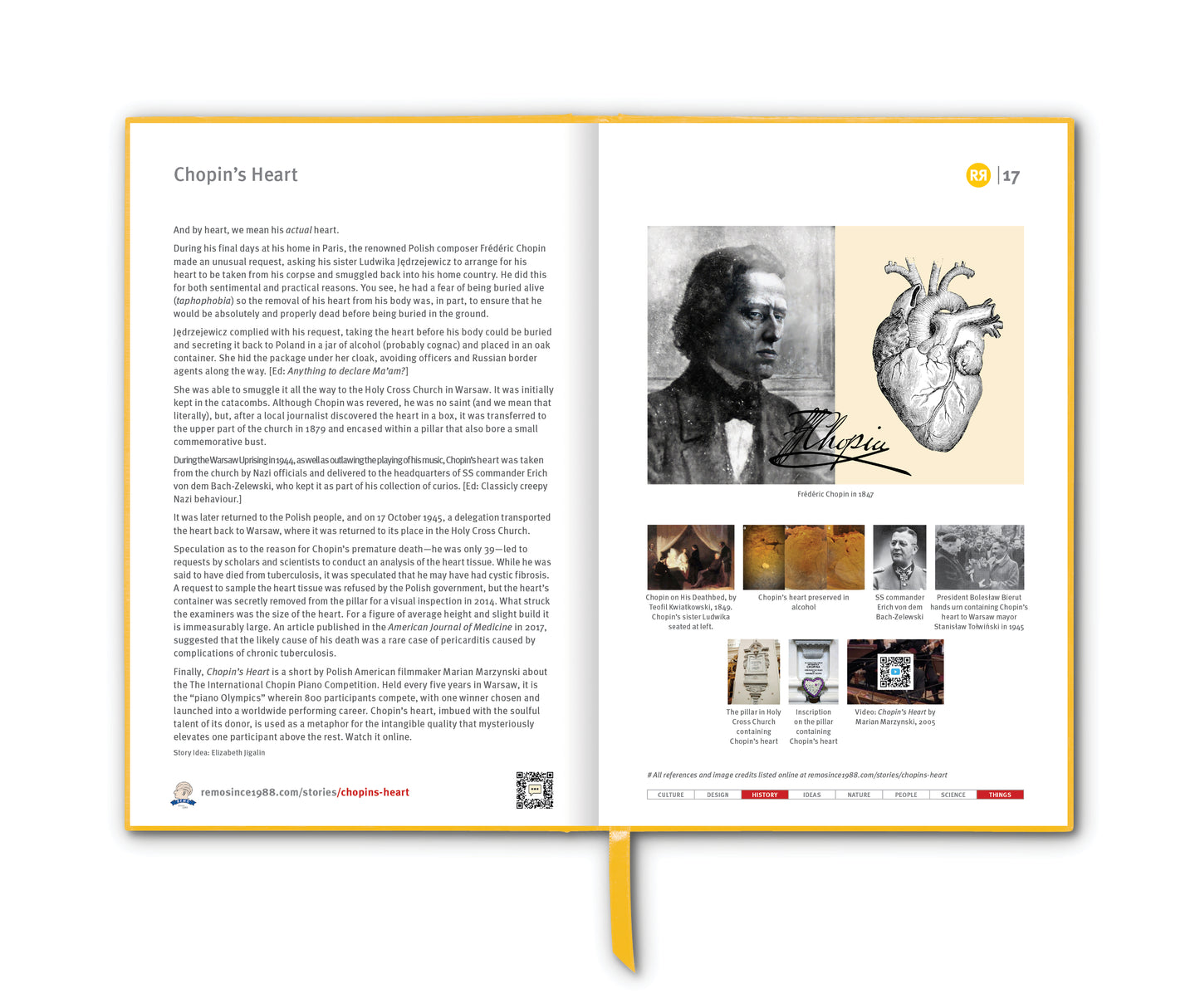

Online democracy advocate Eli Pariser popularised the term in his 2011 book The Filter Bubble, and his TED talk from the same year, “Beware online ‘filter bubbles’”, gave many examples that are still relevant today. The talk is short and well worth watching.

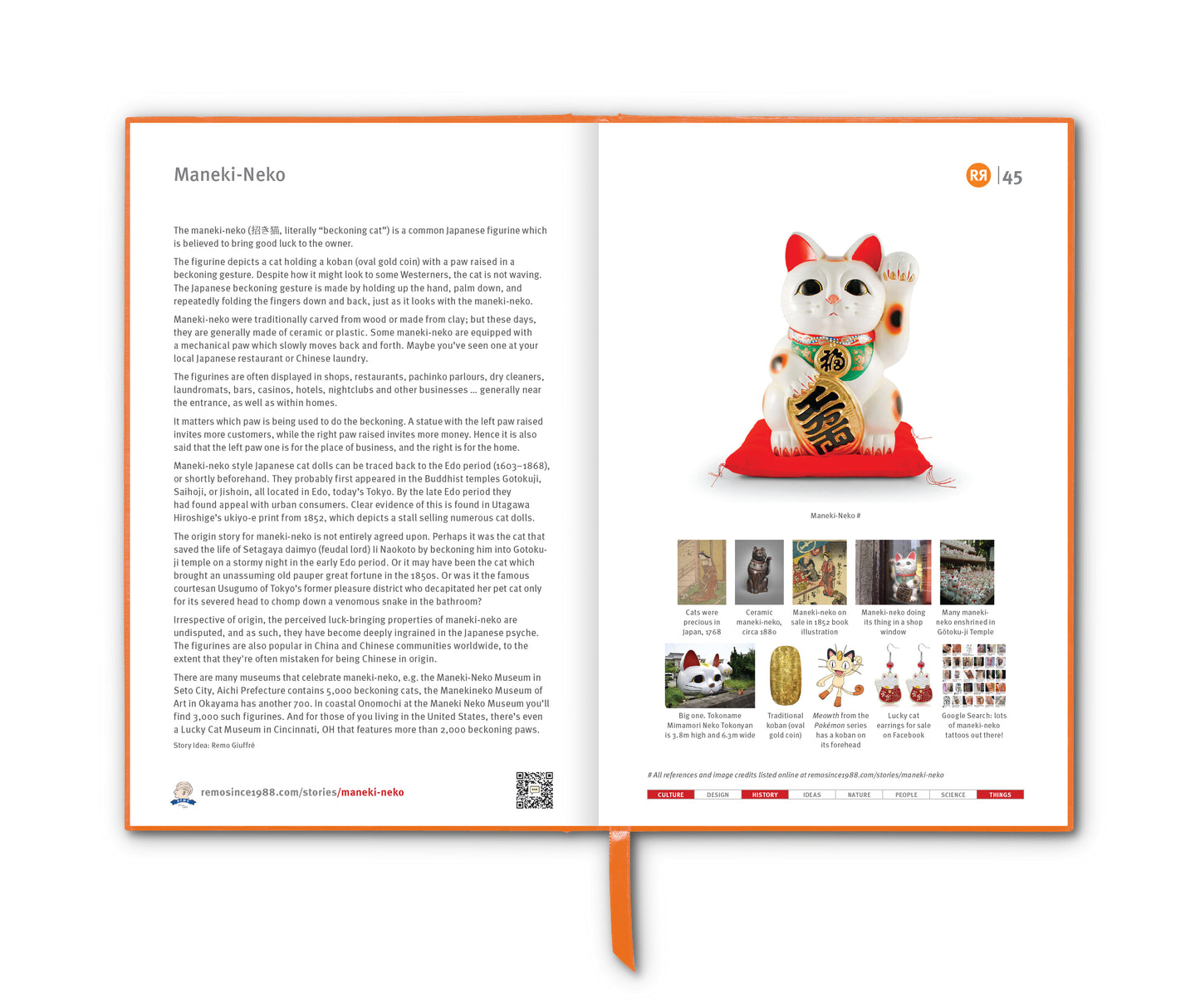

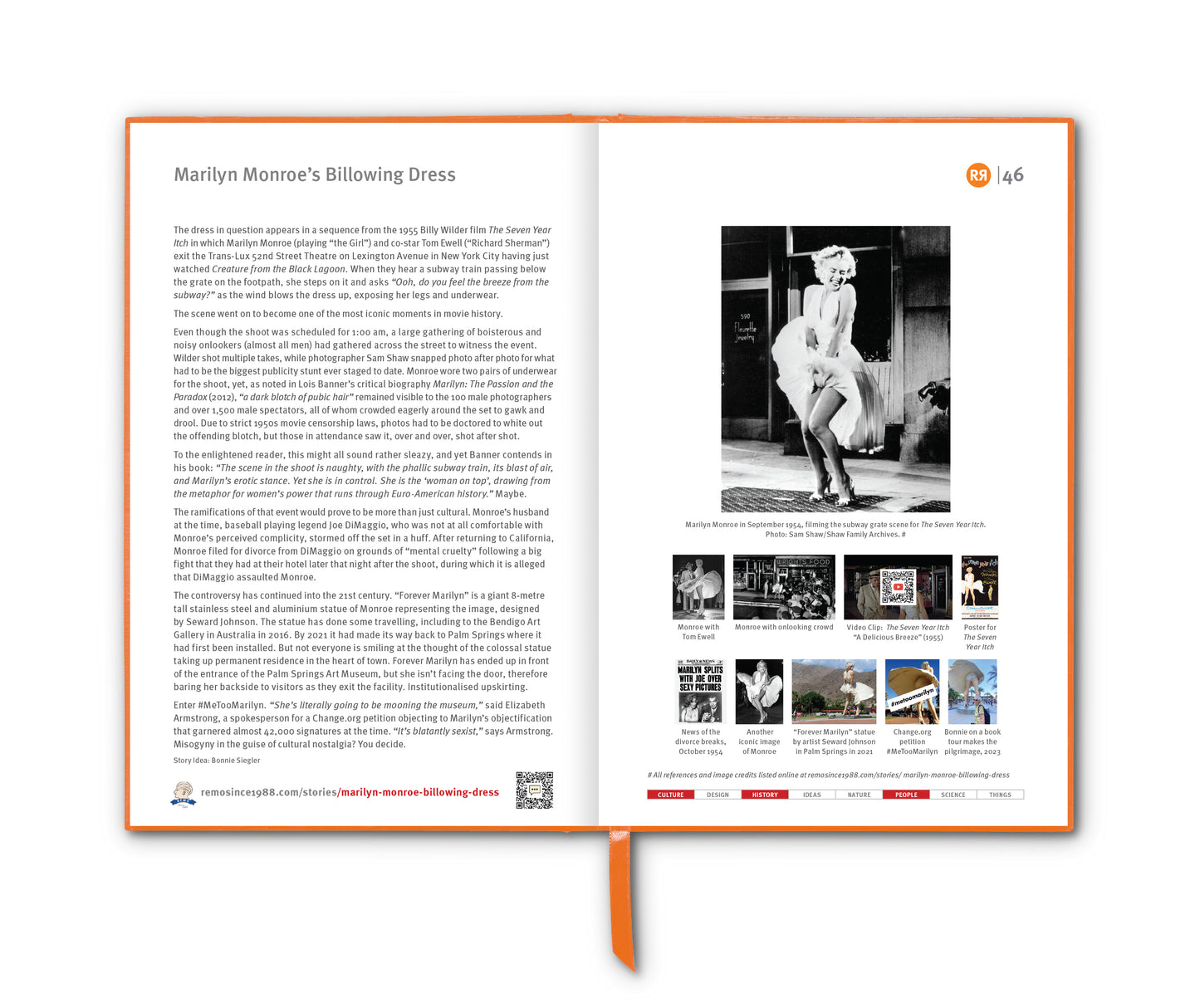

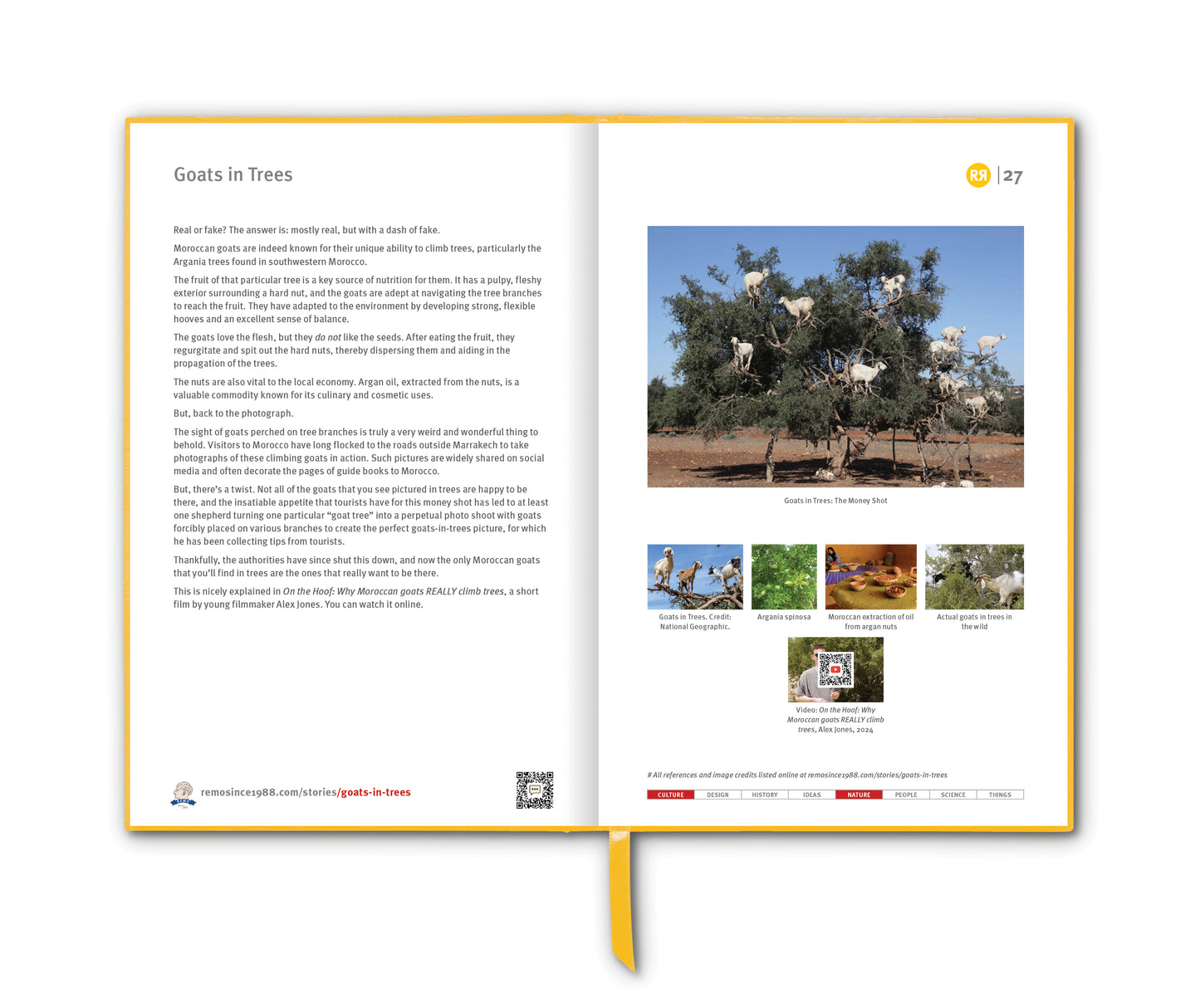

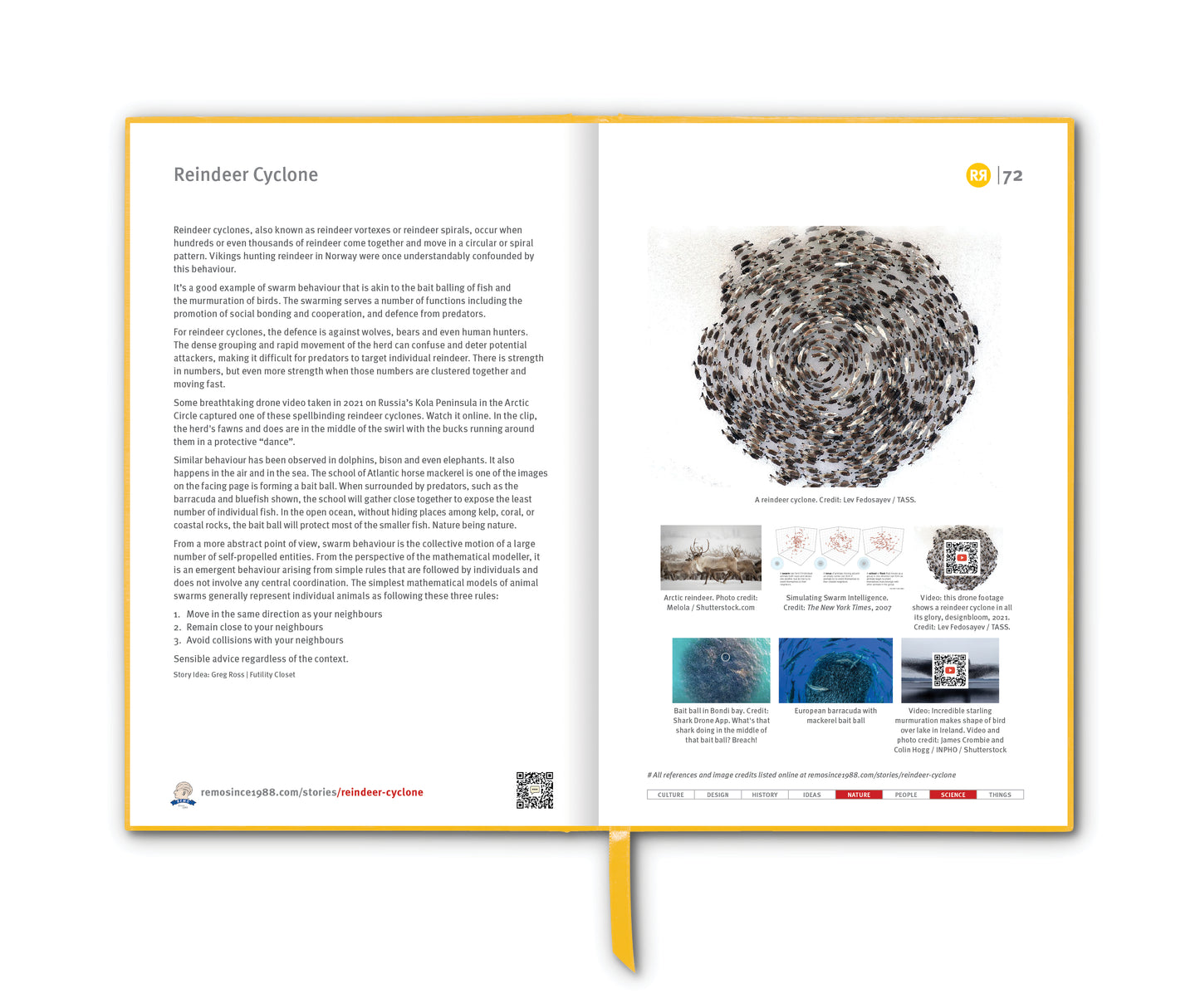

Confirmation bias is the psychological tendency to favour information that confirms one’s pre-existing beliefs and to dismiss or undervalue information that contradicts them. The filter bubble exacerbates confirmation bias by continually presenting people with content that supports their views. This makes it easier for them to stay in their comfort zones and less likely that they will encounter information that challenges their opinions.

The bubble becomes an "echo chamber" (another term often used in this context): opinions are amplified as they are repeated and reinforced by like-minded people, making it difficult for alternative ideas to break through.

Pariser argued powerfully that this would ultimately prove bad for us as a society and bad for democracy, and guess what … he was right; just look at the state of the US political scene. The rise of AI-driven personalisation has accelerated the formation of filter bubbles, and the “Balkanisation” of the Internet continues to feed the social fragmentation that challenges civilised debate and many of our democratic institutions today.

So, what are we to do?

There are a few things that we can do to avoid filter bubbles and echo chambers:

- Be aware of the filter bubble effect, and try to diversify your sources of information.
- Be critical of the content you consume. Don’t accept everything you see or hear as fact.
- Engage with people who have different points of view.

Being exposed to different views, including false ones, need not come at the expense of truth. As argued by David Coady from the Department of Philosophy at the University of Tasmania in a March 2024 piece for Educational Theory:

“False beliefs are bad (at least presumptively), but false reports (or false testimony) are quite another matter. They are only bad if, and to the extent that, they lead to false beliefs. It should go without saying that exposure to different views, including false views, is fundamental to democracy and to educating the kind of free critical thinkers our politics so badly needs.”

Postscript

There’s a reason why REMORANDOM is optimised as a physical book series. It goes without saying that a printed book doesn’t know who you are, and you don’t need to be logged in to read one. So the radically randomised REMORANDOM content takes people away from their algorithms, exposing them to things they weren’t necessarily looking for … thereby nurturing serendipity and stimulating fresh thinking. At least, that’s the theory. What do you think?

_____________________________

References

wikipedia.org/wiki/Filter_bubble

medium.com/data-and-beyond/how-filter-bubbles-are-biasing-your-opinions-on-social-media

onlinelibrary.wiley.com/doi/10.1111/edth.12620

technologyreview.com/2018/08/22/140661/this-is-what-filter-bubbles-actually-look-like/

Images

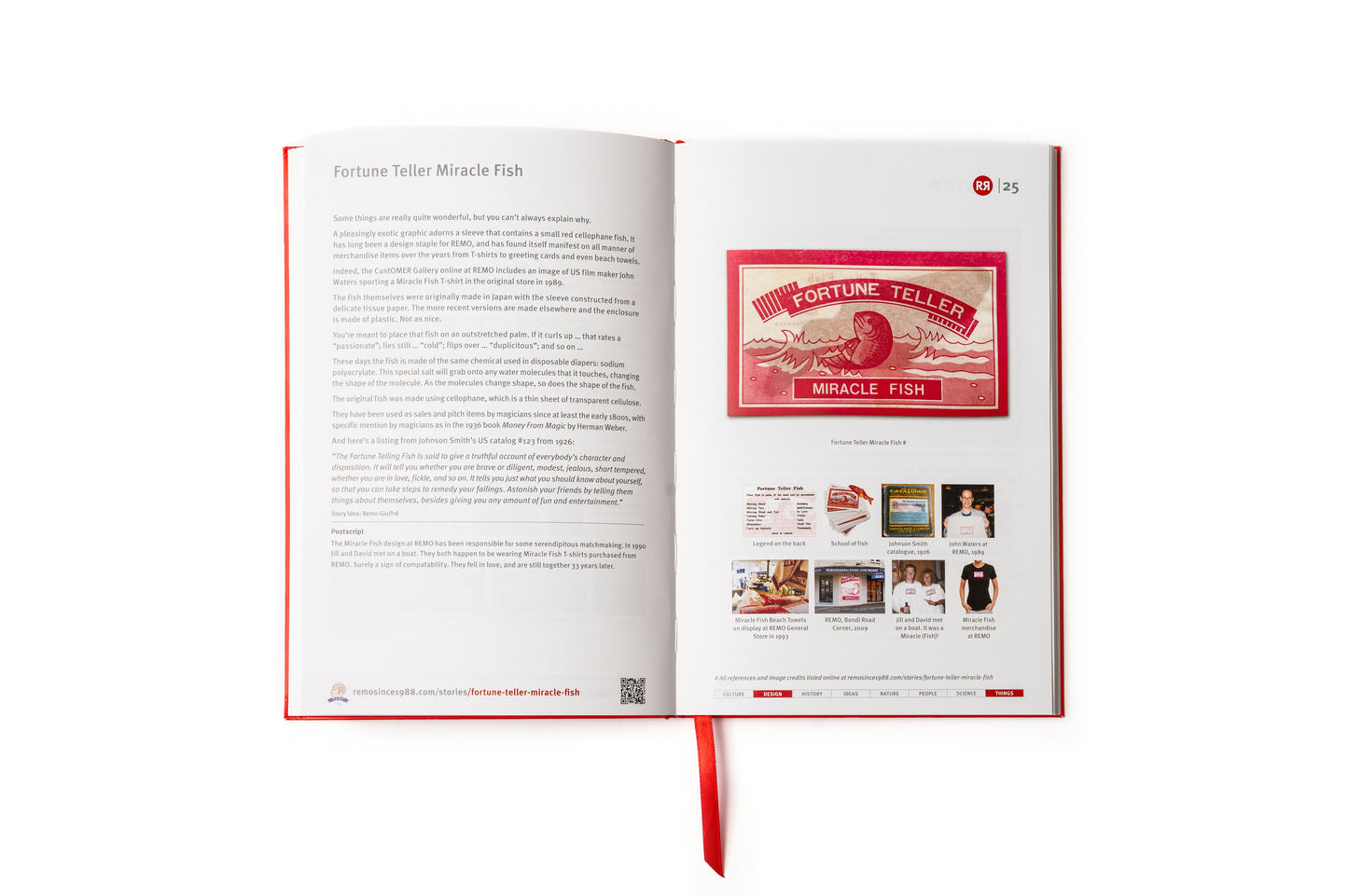

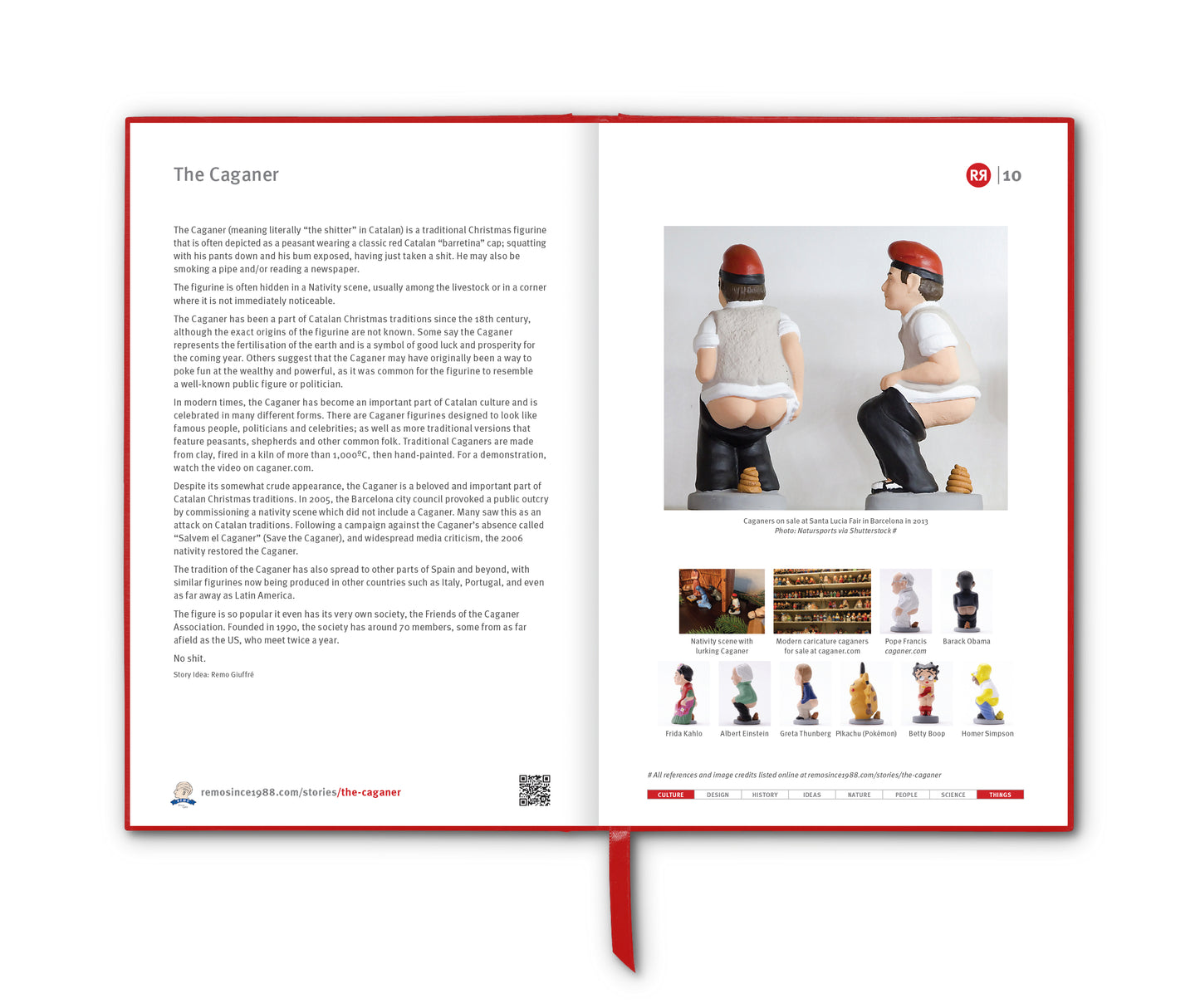

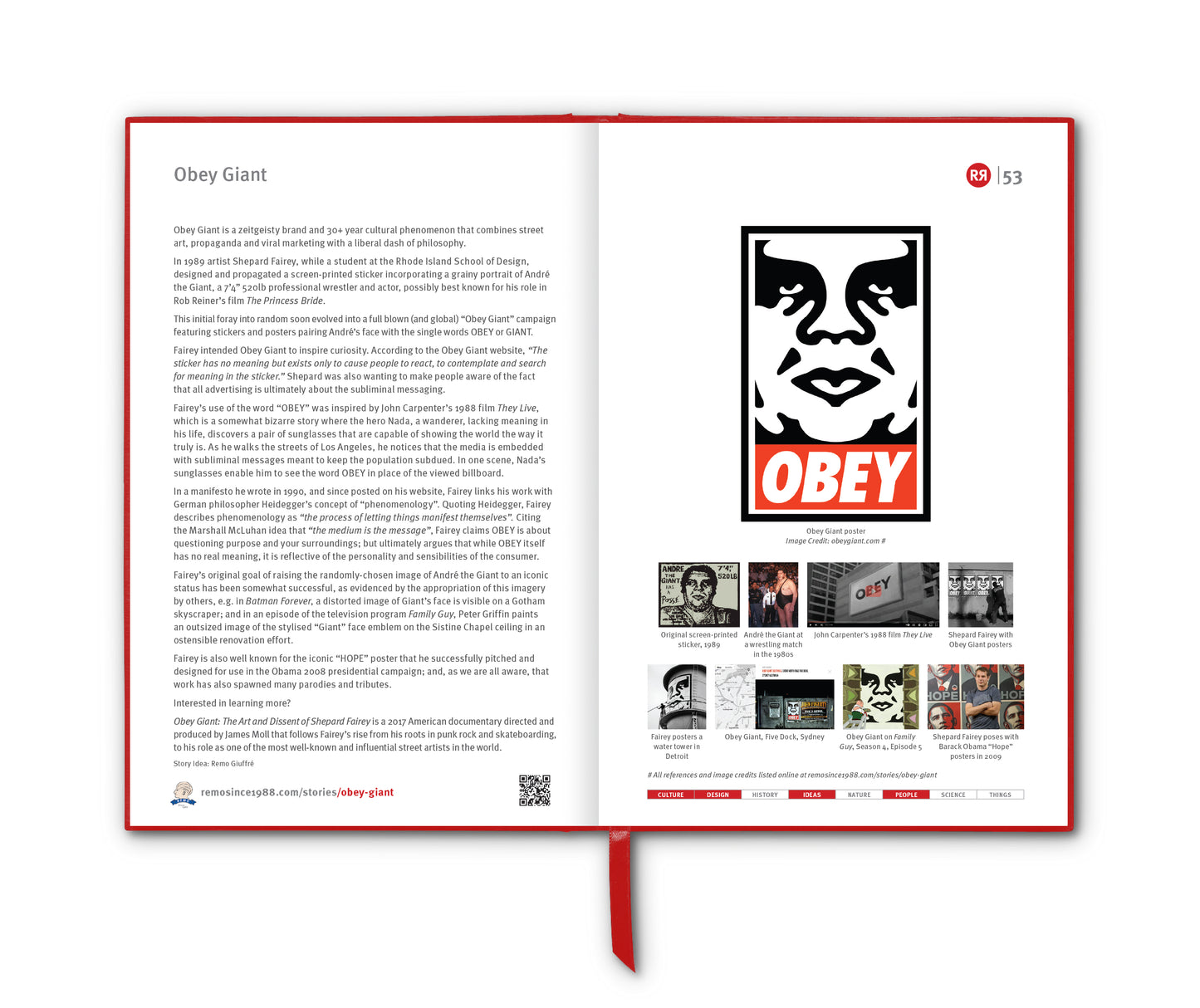

1. You in your filter bubble, not seeing the circles outside. Credit: Eli Pariser, The Filter Bubble, 2011

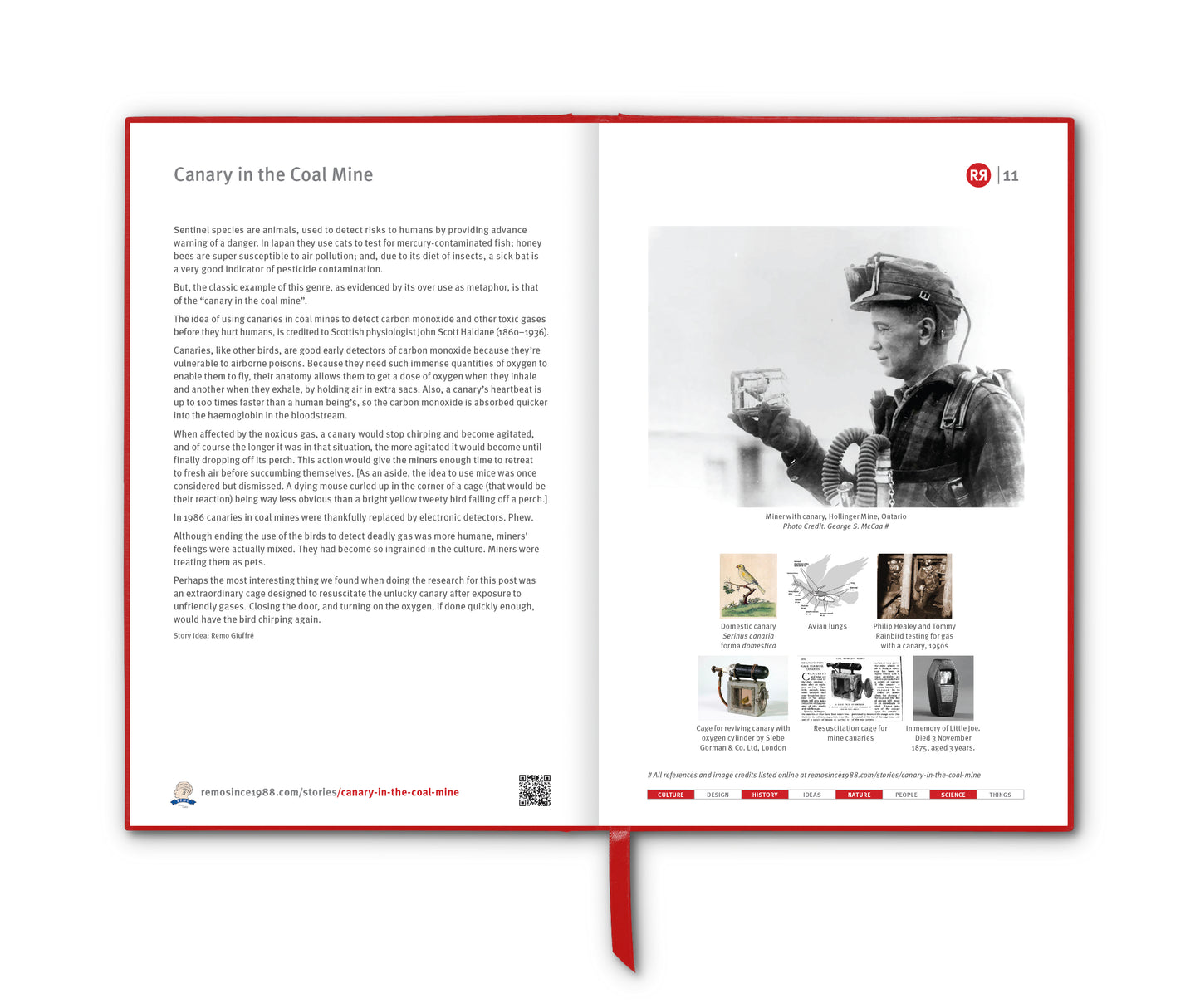

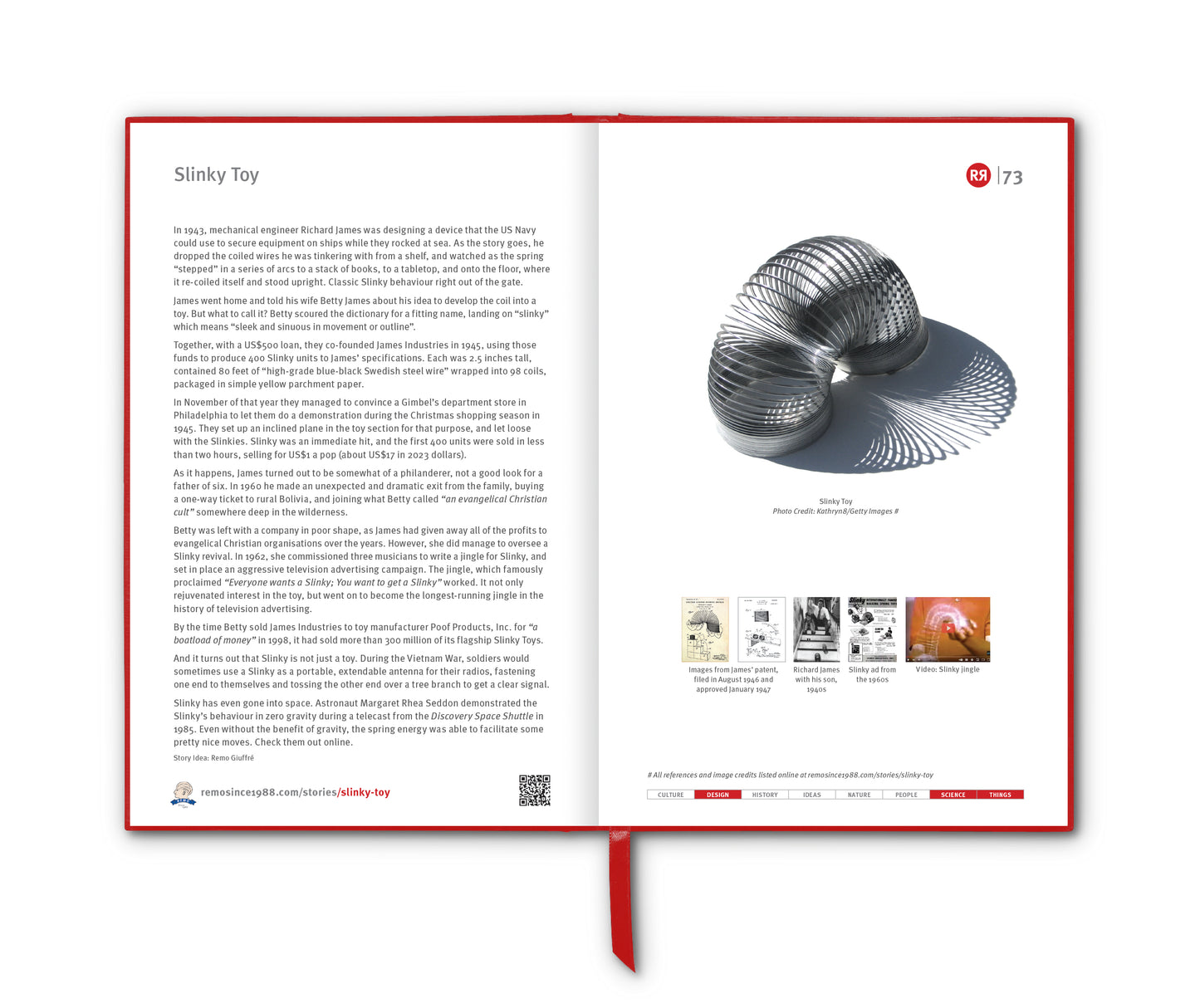

2. Our algorithmic gatekeepers. Credit: Eli Pariser, TED 2011

3. Video: Beware online "filter bubbles", Eli Pariser, TED 2011

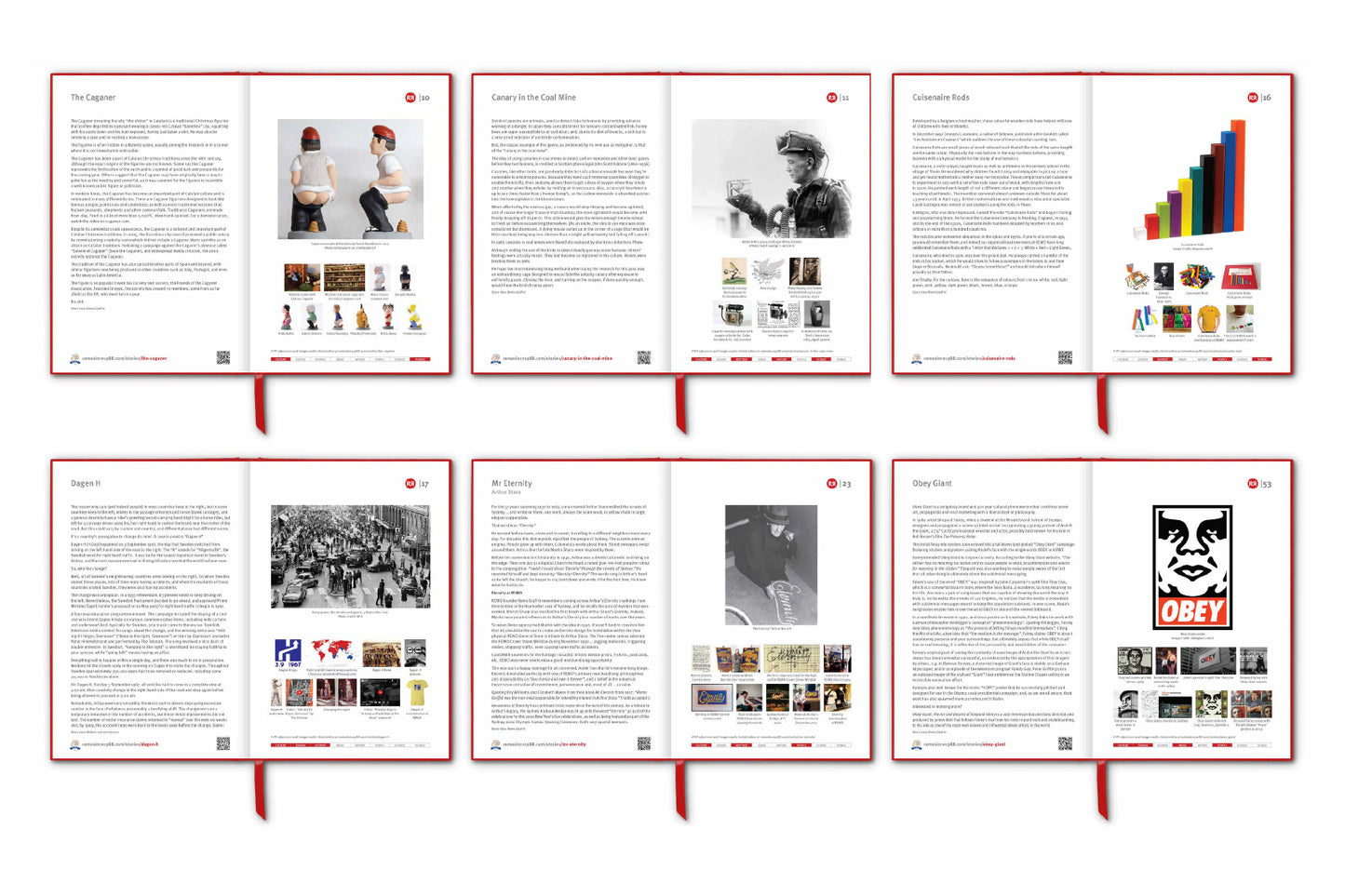

4. Confirmation bias Venn diagram. Credit: weareteachers.com

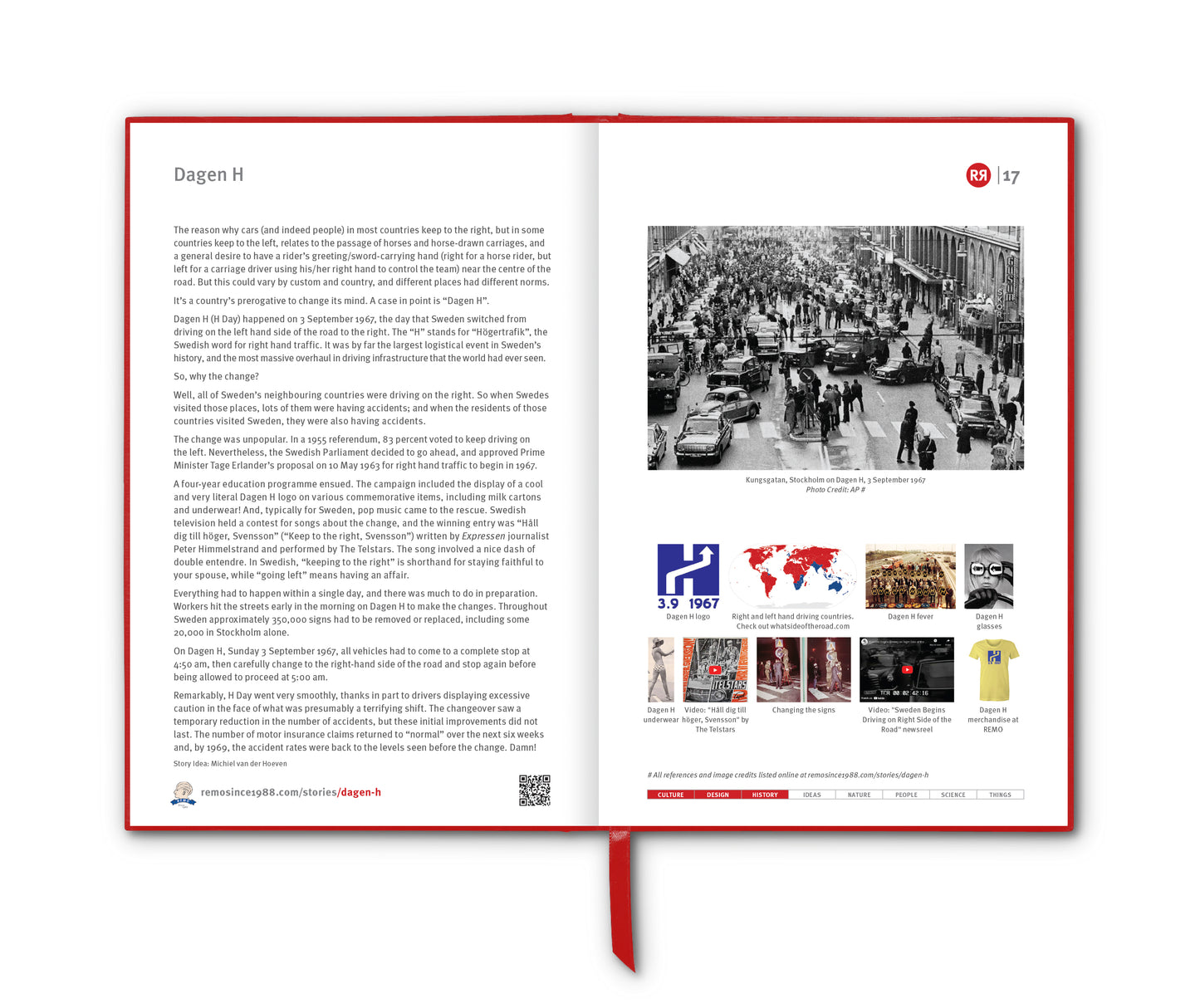

5. A map by scholars at Harvard’s Berkman Klein Center and MIT’s Media Lab, based on “co-citations” (i.e. who links to whom), showing that the media world is bifurcated.

6. A printed REMORANDOM doesn’t know who you are. Reg Lynch and Nerissa Lea getting fresh ideas at home in Tasmania in 2024.